A Practical Guide to Porting and Interacting with Large Language Models: From DeepSeek to Qwen

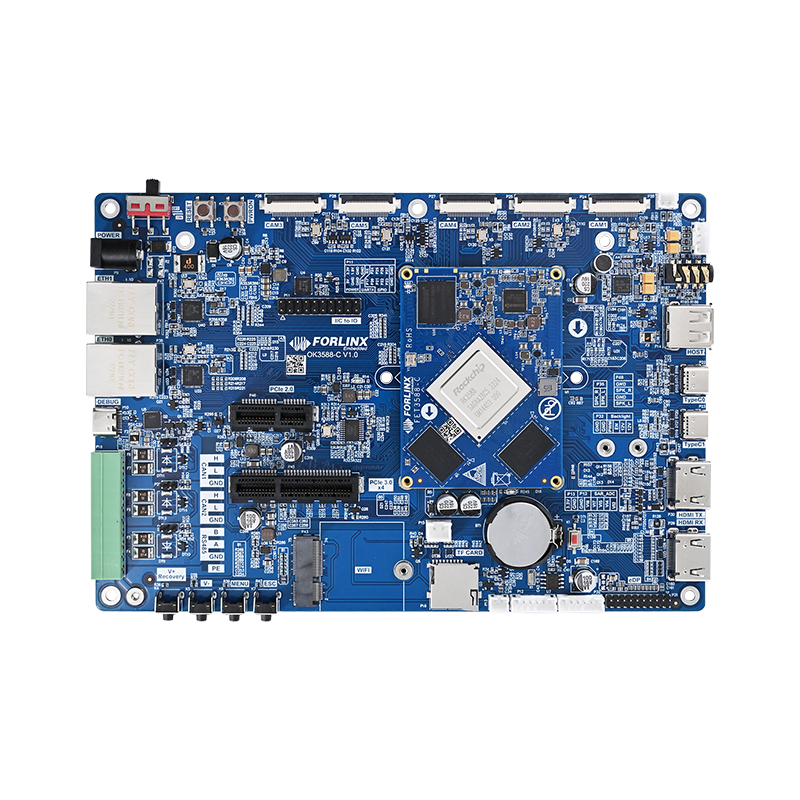

The previously published article "Technical Practice | A Complete Guide to Deploying the DeepSeek-R1 Large Language Model on the OK3588-C Development Board" showed how to port and deploy DeepSeek-R1 on Forlinx Embedded's OK3588-C development board, demonstrated the results, and evaluated its performance. This article continues that work: it explores porting methods on more platforms and introduces more diverse ways of interacting with the model, to help you make better use of large language models.

1. Porting Process

1.1 Deploying to the NPU Using RKLLM-Toolkit

RKLLM-Toolkit is Rockchip's conversion and quantization tool for large language models (LLMs). It converts trained models into the RKLLM format used on Rockchip platforms and is optimized so that these models run efficiently on Rockchip's NPU (Neural Processing Unit). The previous article deployed the model to the NPU with RKLLM-Toolkit. The procedure is as follows:

(1) Download the RKLLM SDK:

First, download the RKLLM SDK package from GitHub and upload it to the virtual machine. SDK download link:

[GitHub - airockchip/rknn-llm](https://github.com/airockchip/rknn-llm)

(2) Check the Python version:

Make sure the Python version of the environment is compatible with the SDK (currently only Python 3.8 and Python 3.10 are supported).
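The version check can also be scripted. The sketch below is a minimal example that only encodes the 3.8/3.10 rule stated above; if a newer RKLLM release supports other versions, `SUPPORTED_VERSIONS` (a name introduced here for illustration) would need updating.

```python
import sys

# Versions supported by RKLLM-Toolkit according to this article (3.8 and 3.10).
SUPPORTED_VERSIONS = {(3, 8), (3, 10)}


def is_supported_python(version_info=sys.version_info):
    """Return True if the major.minor version is in SUPPORTED_VERSIONS."""
    return (version_info[0], version_info[1]) in SUPPORTED_VERSIONS


if __name__ == "__main__":
    current = f"{sys.version_info[0]}.{sys.version_info[1]}"
    if is_supported_python():
        print(f"Python {current}: OK for RKLLM-Toolkit")
    else:
        print(f"Python {current}: not supported, use Python 3.8 or 3.10")
```

Run it with the interpreter you plan to install the wheel into, for example `python3 check_python.py` inside the virtual environment.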

(3) Prepare the virtual machine environment:

Install the rkllm-toolkit wheel in the virtual machine. The wheel package is located in rknn-llm-main/rkllm-toolkit.

pip install rkllm_toolkit-1.1.4-cp38-cp38-linux_x86_64.whl
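The wheel shown above is tagged `cp38`, so it only installs on Python 3.8; Python 3.10 needs the `cp310` wheel. As a minimal sketch (the helper names are hypothetical), the matching wheel can be picked from the tag embedded in the standard wheel file name `name-version-pythontag-abitag-platform.whl`:

```python
import sys
from pathlib import Path


def wheel_tag(version_info=sys.version_info):
    """CPython tag used in wheel file names, e.g. (3, 8) -> 'cp38'."""
    return f"cp{version_info[0]}{version_info[1]}"


def pick_wheel(wheel_dir, version_info=sys.version_info):
    """Return the rkllm_toolkit wheel in wheel_dir matching the interpreter, or None."""
    tag = wheel_tag(version_info)
    for wheel in sorted(Path(wheel_dir).glob("rkllm_toolkit-*.whl")):
        # Wheel names follow name-version-pythontag-abitag-platform.whl
        parts = wheel.stem.split("-")
        if len(parts) == 5 and parts[2] == tag:
            return wheel
    return None
```

For example, `pick_wheel("rknn-llm-main/rkllm-toolkit")` returns the path to pass to `pip install`, or `None` if no wheel matches the running interpreter.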

(4) Download the model:

Download the DeepSeek-R1 model to be deployed. This example uses DeepSeek-R1-Distill-Qwen-1.5B from Hugging Face.

git clone https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B
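Hugging Face stores large weight files with Git LFS. If git-lfs is not installed when cloning, the `.safetensors` files are only short text pointers and the later conversion step fails. Running `git lfs install` before cloning (or `git lfs pull` afterwards) fetches the real weights. The sketch below, with a hypothetical helper name, flags weight files that are still pointers:

```python
from pathlib import Path

# First bytes of a Git LFS pointer file (the real weights were not downloaded).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(model_dir):
    """Return names of *.safetensors files that are still Git LFS pointers."""
    pointers = []
    for path in sorted(Path(model_dir).glob("*.safetensors")):
        with open(path, "rb") as f:
            head = f.read(len(LFS_POINTER_PREFIX))
        if head == LFS_POINTER_PREFIX:
            pointers.append(path.name)
    return pointers
```

An empty list from `find_lfs_pointers("DeepSeek-R1-Distill-Qwen-1.5B")` means the weights were downloaded.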

(5) Use the sample code for model conversion:

In the rknn-llm-main/examples/DeepSeek-R1-Distill-Qwen-1.5B_Demo directory, use the sample code provided with RKLLM-Toolkit to convert the model format:

python generate_data_quant.py -m /path/to/DeepSeek-R1-Distill-Qwen-1.5B
python export_rkllm.py
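The two scripts must run in order, and the second should not start if the first fails. A minimal Python driver with hypothetical helper names is sketched below. It uses only the arguments shown above; reading `generate_data_quant.py` as the step that prepares quantization data is an inference from its name, not something this article confirms.

```python
import subprocess
import sys


def conversion_commands(model_path, python=sys.executable):
    """Build the two conversion steps in the order used above."""
    return [
        # Step 1: prepare data for quantization (role inferred from the script name).
        [python, "generate_data_quant.py", "-m", model_path],
        # Step 2: export the quantized model in .rkllm format.
        [python, "export_rkllm.py"],
    ]


def run_conversion(model_path, workdir="."):
    """Run both steps in workdir; check=True stops at the first failing step."""
    for cmd in conversion_commands(model_path):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, cwd=workdir, check=True)
```

Call `run_conversion("/path/to/DeepSeek-R1-Distill-Qwen-1.5B")` from the demo directory.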

(6) Compile the executable program:

Run the build-linux.sh script in the deploy directory to cross-compile the sample code. Before running it, replace the cross-compiler path in the script with the actual path on your machine. The build generates a build_linux_aarch64_Release folder in the same directory, which contains the llm_demo executable and other files.

./build-linux.sh
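Because build-linux.sh cross-compiles on an x86 host, it is worth confirming that llm_demo is really an ARM64 (AArch64) binary before copying it to the board. The `file` command reports this; the sketch below, with hypothetical helper names, reads the ELF header directly, where the 2-byte e_machine field at offset 18 equals 183 (EM_AARCH64) for ARM64.

```python
import struct

EM_AARCH64 = 183  # e_machine value for ARM64 in the ELF specification


def elf_machine(path):
    """Return the e_machine field of an ELF file, or None if it is not ELF."""
    with open(path, "rb") as f:
        header = f.read(20)
    if len(header) < 20 or header[:4] != b"\x7fELF":
        return None
    # EI_DATA (byte 5): 1 = little-endian, 2 = big-endian
    endian = "<" if header[5] == 1 else ">"
    (machine,) = struct.unpack(endian + "H", header[18:20])
    return machine


def is_aarch64_binary(path):
    return elf_machine(path) == EM_AARCH64
```

For example, `is_aarch64_binary("build_linux_aarch64_Release/llm_demo")` should return `True` after a successful cross-compilation.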

(7) Deploy the model:

Copy the converted _W8A8_RK3588.rkllm model file and the librkllmrt.so dynamic library (located in rknn-llm-main/rkllm-runtime/Linux/librkllm_api/aarch64) into the build_linux_aarch64_Release folder generated by the build, then upload this folder to the target board.

On the target board, add execute permission to the llm_demo file in the build_linux_aarch64_Release folder and run it:
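A quick pre-upload check helps avoid a round trip to the board. The sketch below, with a hypothetical helper name, only looks for the three items named in this step (the llm_demo executable, librkllmrt.so and a .rkllm model) at the top level of the folder; if your build places files elsewhere, adjust the paths accordingly.

```python
from pathlib import Path


def missing_deploy_files(folder):
    """List the items from this step that are still missing from folder."""
    folder = Path(folder)
    missing = []
    if not (folder / "llm_demo").is_file():
        missing.append("llm_demo")
    if not (folder / "librkllmrt.so").is_file():
        missing.append("librkllmrt.so")
    if not any(folder.glob("*.rkllm")):
        missing.append("*.rkllm model")
    return missing
```

An empty list from `missing_deploy_files("build_linux_aarch64_Release")` means the folder is ready to upload.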

chmod +x llm_demo
./llm_demo _W8A8_RK3588.rkllm 10000 10000
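The two numbers after the model file are limits passed to the demo. The sketch below assumes they are max_new_tokens and max_context_len, as in the usage string of the rknn-llm example; check the demo source for your SDK version. The helper names are hypothetical.

```python
import os
import subprocess


def llm_demo_command(model_path, max_new_tokens=10000, max_context_len=10000):
    """Build the llm_demo command line.

    Assumption: the numeric arguments are max_new_tokens and max_context_len.
    """
    return ["./llm_demo", model_path, str(max_new_tokens), str(max_context_len)]


def run_llm_demo(model_path, workdir="build_linux_aarch64_Release"):
    """Make llm_demo executable (like chmod +x) and run it from workdir."""
    demo = os.path.join(workdir, "llm_demo")
    os.chmod(demo, os.stat(demo).st_mode | 0o111)
    subprocess.run(llm_demo_command(model_path), cwd=workdir, check=True)
```

Lowering the limits, for example `llm_demo_command(model, 512, 2048)`, is a simple way to reduce memory use on the board if that assumption holds.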

Demo 1

Advantages and Disadvantages:

Advantages: After being deployed to the NPU, the large language model can run efficiently, with excellent performance and less CPU resource consumption.

Disadvantages: Compared with the other methods, the deployment process is more complex and requires more technical background and experience.

1.2 One-Click Deployment to the CPU Using Ollama

Ollama is an open-source framework for running large language models (LLMs) locally. It supports a variety of open-source models (such as LLaMA and Falcon) and runs on macOS, Windows, and Linux.

With Ollama, users can deploy and run large language models without relying on cloud services. Although Ollama allows rapid deployment, it is not optimized for the RK3588 chip and does not use its NPU, so DeepSeek-R1 runs only on the CPU and may consume considerable CPU resources. The procedure is as follows:

(1) Download Ollama:

Download and install Ollama with the official install script:

curl -fsSL https://ollama.com/install.sh | sh

If the download is slow, the following workaround rewrites the install script so that it fetches the Ollama v0.5.7 release from GitHub instead:

curl -fsSL https://ollama.com/install.sh -o ollama_install.sh
chmod +x ollama_install.sh
sed -i 's|https://ollama.com/download/|https://github.com/ollama/ollama/releases/download/v0.5.7/|' ollama_install.sh
sh ollama_install.sh
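To make the sed step explicit, here is the same rewrite in Python. Like `sed 's|A|B|'` without the `g` flag, it replaces only the first match on each line. The constant and function names are introduced here for illustration; the version pin v0.5.7 comes from the command above.

```python
from pathlib import Path

OFFICIAL_PREFIX = "https://ollama.com/download/"
MIRROR_PREFIX = "https://github.com/ollama/ollama/releases/download/v0.5.7/"


def rewrite_install_script(text):
    """Mimic sed 's|OFFICIAL|MIRROR|': replace the first match on each line."""
    return "".join(
        line.replace(OFFICIAL_PREFIX, MIRROR_PREFIX, 1)
        for line in text.splitlines(keepends=True)
    )


def patch_install_script(path="ollama_install.sh"):
    """Rewrite the downloaded install script in place, like sed -i."""
    script = Path(path)
    script.write_text(rewrite_install_script(script.read_text()))
```

Pinning a release tag keeps the installed version fixed, which is useful for reproducible setups but means newer Ollama releases are not picked up automatically.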

(2) Verify the installation:

Confirm that Ollama is installed correctly by running the following command:

ollama --help
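The same check can be done from a script, for example in a provisioning step. This sketch, with a hypothetical function name, looks up the `ollama` binary on PATH and returns the output of `ollama --version`, or `None` if Ollama is not installed.

```python
import shutil
import subprocess


def ollama_version(binary="ollama"):
    """Return the output of `<binary> --version`, or None if it is not on PATH."""
    path = shutil.which(binary)
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    # Some versions print warnings (e.g. no running server) to stderr.
    return (result.stdout or result.stderr).strip()
```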

(3) Download DeepSeek-R1:

Look up the download command for the DeepSeek-R1 model on the official Ollama website.

(4) Run DeepSeek-R1:

Start the DeepSeek-R1 model through the Ollama command-line interface.

ollama run deepseek-r1:1.5b
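Besides the interactive command line, the running Ollama service exposes an HTTP API, by default on port 11434, which applications can call. The sketch below sends a single non-streaming request to `/api/generate`. DeepSeek-R1 typically wraps its reasoning in `<think>...</think>` before the answer, so a small helper separates the two; whether the tags appear in the response can depend on the Ollama version. The function names are hypothetical.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default listen address
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def build_generate_request(model, prompt, base_url=OLLAMA_URL):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, base_url=OLLAMA_URL, timeout=300):
    """Send the prompt and return the generated text."""
    request = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


def split_reasoning(text):
    """Split DeepSeek-R1 output into (reasoning, answer)."""
    match = THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    answer = text[:match.start()] + text[match.end():]
    return match.group(1).strip(), answer.strip()
```

For example, `split_reasoning(generate("deepseek-r1:1.5b", "What is an NPU?"))` returns the reasoning and the final answer separately, which is handy when only the answer should be shown to users.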

Demo 2

Advantages and Disadvantages:

Advantages: The deployment process is simple and fast, suitable for rapid testing and application.

Disadvantages: Since the model is not optimized for RK3588, running it on the CPU may lead to high CPU usage and affect performance.

2. Deploying Other Large Models on the FCU3001 Platform

Apart from DeepSeek-R1, Ollama also supports many other large language models, such as Qwen, which shows how widely applicable it is. Taking Qwen as an example, this section deploys a large language model on the FCU3001, an AI edge computing terminal from Forlinx Embedded built on the NVIDIA Jetson Xavier NX processor.

With its GPU computing power and optimized software support, the FCU3001 can efficiently run models supported by Ollama, such as Qwen, while Ollama's flexibility and ease of use help keep the model running stably and smoothly. The steps are as follows:

(1) CUDA Installation:

To run the model on the GPU of the NVIDIA Jetson Xavier NX, first install JetPack (which includes CUDA) with the commands below, then install Ollama as described in Section 1.2.

sudo apt update
sudo apt upgrade
sudo apt install nvidia-jetpack -y
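After installation, the Jetson software release can be read from `/etc/nv_tegra_release`, whose first line looks like `# R35 (release), REVISION: 4.1, ...`, and the CUDA compiler is normally found at `/usr/local/cuda/bin/nvcc`. The sketch below assumes those two locations; the helper names are hypothetical.

```python
import re
from pathlib import Path

RELEASE_RE = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)")


def l4t_version(text):
    """Parse e.g. '# R35 (release), REVISION: 4.1, ...' into '35.4.1'."""
    match = RELEASE_RE.search(text)
    return f"{match.group(1)}.{match.group(2)}" if match else None


def installed_l4t_version(path="/etc/nv_tegra_release"):
    """Return the L4T version of this Jetson, or None if the file is absent."""
    release = Path(path)
    return l4t_version(release.read_text()) if release.exists() else None


def cuda_toolkit_present(nvcc_path="/usr/local/cuda/bin/nvcc"):
    """Assumed default CUDA location on JetPack installs."""
    return Path(nvcc_path).exists()
```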

(2) Visit the Ollama official website:

Browse other models supported by Ollama.

(3) Select a version:

Choose the Qwen 1.8B version from the list of models supported by Ollama.

(4) Run the model:

In the Ollama environment, use the command ollama run qwen:1.8b to start the model.

ollama run qwen:1.8b
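To confirm that the model actually uses the GPU rather than the CPU, `ollama ps` shows a PROCESSOR column (for example "100% GPU"). The same information is available from the `/api/ps` endpoint, which lists each loaded model with its total `size` and the part held in GPU memory (`size_vram`). A minimal sketch with hypothetical helper names:

```python
import json
import urllib.request


def fetch_ps(base_url="http://localhost:11434", timeout=10):
    """GET /api/ps: models currently loaded by Ollama."""
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=timeout) as resp:
        return json.loads(resp.read())


def gpu_offload(ps_response):
    """Map each loaded model name to the fraction of its size held in GPU memory."""
    result = {}
    for model in ps_response.get("models", []):
        size = model.get("size", 0)
        result[model["name"]] = model.get("size_vram", 0) / size if size else 0.0
    return result
```

A value of 1.0 for `qwen:1.8b` while the model is loaded means it is fully offloaded to the GPU.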

Demo 3

3. Interaction Methods

In the deployment methods above, interaction happens mainly through a serial debug terminal. Without a graphical interface, images and tables cannot be displayed and conversation history is not presented. To improve the user experience, a richer interaction experience can be provided by integrating Chatbox UI or Web UI.
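Chat front ends keep conversation history by storing earlier turns and resending them with each new question. With Ollama this is done through the `/api/chat` endpoint, which takes a list of `{"role", "content"}` messages. The class below is a minimal, hypothetical client sketch of that idea, not the implementation used by Chatbox or Web UI.

```python
import json
import urllib.request


class ChatSession:
    """Minimal chat client that keeps history and resends it on every turn."""

    def __init__(self, model, base_url="http://localhost:11434"):
        self.model = model
        self.base_url = base_url
        self.messages = []  # list of {"role": ..., "content": ...} dicts

    def build_request(self, user_text):
        """Return (request, messages) for the next turn without changing history yet."""
        messages = self.messages + [{"role": "user", "content": user_text}]
        payload = {"model": self.model, "messages": messages, "stream": False}
        request = urllib.request.Request(
            f"{self.base_url}/api/chat",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        return request, messages

    def ask(self, user_text, timeout=300):
        request, messages = self.build_request(user_text)
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            reply = json.loads(resp.read())["message"]["content"]
        # Commit the turn only after the model has answered.
        self.messages = messages + [{"role": "assistant", "content": reply}]
        return reply
```

Because the full history is resent each time, long conversations eventually exceed the model's context window; graphical front ends usually handle this by truncating or summarizing older turns.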

3.1 Chatbox UI

Chatbox is an AI assistant tool that integrates multiple language models and supports various models such as ChatGPT and Claude. It not only has the functions of local data storage and multilingual switching but also supports image generation and formats like Markdown and LaTeX, providing a user-friendly interface and team collaboration features. Chatbox supports Windows, macOS, and Linux systems. Users can quickly interact with large language models locally. The steps are as follows:

(1) Download Chatbox:

Download the appropriate installation package from the official Chatbox website (https://chatboxai.app/zh).

(2) Install and configure:

After the download completes, you get a file named Chatbox-1.10.4-arm64.AppImage, which is a standalone executable. Add execute permission and run it, then, in the Chatbox settings, select the local Ollama API and configure the model to use.
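Chatbox talks to Ollama over its HTTP API, usually at `http://127.0.0.1:11434`. If no models appear in Chatbox, querying `/api/tags` directly shows which models the local Ollama instance has installed. If Chatbox runs on a different machine, Ollama must listen on a network interface, for example by starting it with `OLLAMA_HOST=0.0.0.0`. A minimal sketch with hypothetical helper names:

```python
import json
import urllib.request

# Address of the local Ollama API (Ollama's default port is 11434).
OLLAMA_API = "http://127.0.0.1:11434"


def fetch_tags(base_url=OLLAMA_API, timeout=10):
    """GET /api/tags: the models installed in the local Ollama instance."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read())


def model_names(tags_response):
    """Extract names such as 'deepseek-r1:1.5b' from an /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]
```

`model_names(fetch_tags())` should list the same models that Chatbox offers in its model selector.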

chmod +x Chatbox-1.10.4-arm64.AppImage
./Chatbox-1.10.4-arm64.AppImage

(3) Q&A dialogue:

Users can communicate with the model through an intuitive graphical interface and experience a more convenient and smooth interaction.
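Graphical clients feel responsive partly because they display the answer token by token. With `"stream": true`, Ollama's `/api/chat` returns one JSON object per line, each carrying a piece of the reply in `message.content`, until an object with `"done": true` arrives. The sketch below, with hypothetical function names, parses that stream.

```python
import json
import urllib.request


def iter_stream_text(lines):
    """Yield text pieces from Ollama's newline-delimited JSON chat stream."""
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        chunk = json.loads(line)
        piece = chunk.get("message", {}).get("content", "")
        if piece:
            yield piece
        if chunk.get("done"):
            break


def stream_chat(model, messages, base_url="http://localhost:11434", timeout=300):
    """Send a streaming /api/chat request and yield the reply piece by piece."""
    payload = {"model": model, "messages": messages, "stream": True}
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        # The HTTP response iterates line by line, one JSON object per line.
        yield from iter_stream_text(resp)
```

Usage: `for piece in stream_chat("qwen:1.8b", [{"role": "user", "content": "Hello"}]): print(piece, end="", flush=True)`.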

Demo 4

3.2 Web UI

Web UI provides a graphical user interface through web pages or web applications, enabling users to easily interact with large language models via a browser. Users only need to access the corresponding IP address and port number in the browser to ask real-time questions. The steps are as follows:

(1) Set up the Web UI environment:

Configure the environment required for Web UI. Python 3.11 is recommended for Web UI, so use Miniconda to create a virtual environment with Python 3.11.

Install Miniconda

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-aarch64.sh
chmod +x Miniconda3-latest-Linux-aarch64.sh
./Miniconda3-lates

Set up the Web UI environment:

conda create --name Web-Ui python=3.11
conda activate Web-Ui
pip install open-webui -i https://pypi.tuna.tsinghua.edu.cn/simple

(2) Start the Web UI:

Run open-webui serve to start the Web UI application. The server listens on 0.0.0.0:8080, that is, on port 8080 of all network interfaces.

open-webui serve

If the information in the following red box appears, it means the startup is successful.

(3) Access the Web UI:

In a browser, enter the device's IP address and port 8080 (for example, http://<device-IP>:8080) to open the Web UI interface and start interacting with the large language model.

Register an account

Demo 5
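If the Web UI page does not load in the browser, a first check is whether port 8080 on the device accepts TCP connections at all, which separates a server that failed to start from a network or firewall problem. A minimal sketch with a hypothetical function name:

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Run `is_port_open("127.0.0.1", 8080)` on the device itself first, then `is_port_open("<device-IP>", 8080)` from the client machine; if only the first succeeds, the problem lies in the network path rather than in Open WebUI.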

4. Summary

This article has demonstrated several methods for porting large language models to the OK3588-C development board and the FCU3001 AI edge computing terminal, and has shown how interaction methods such as Chatbox UI and Web UI can improve the user experience.

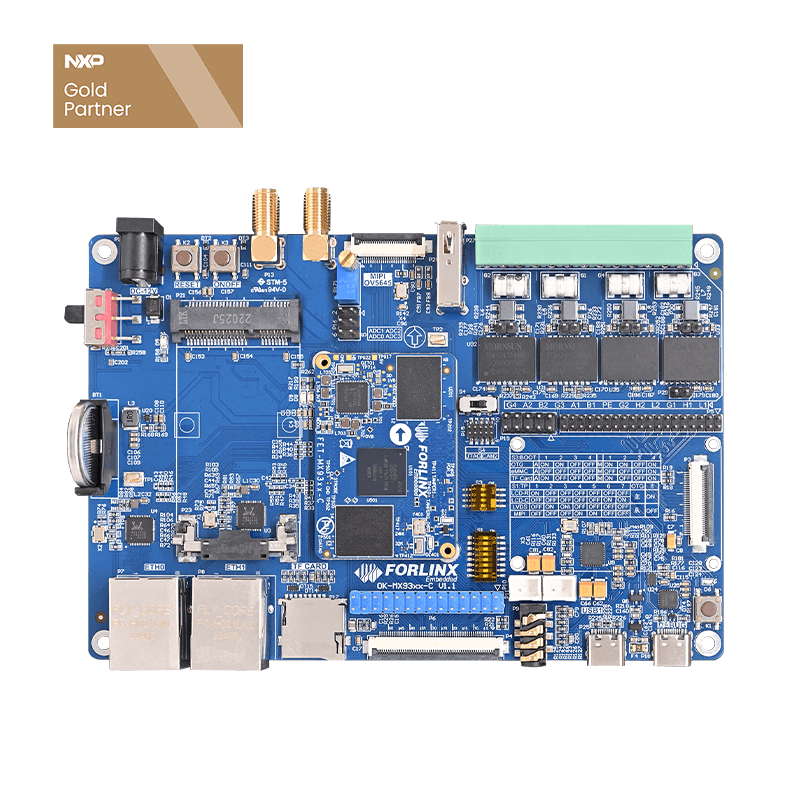

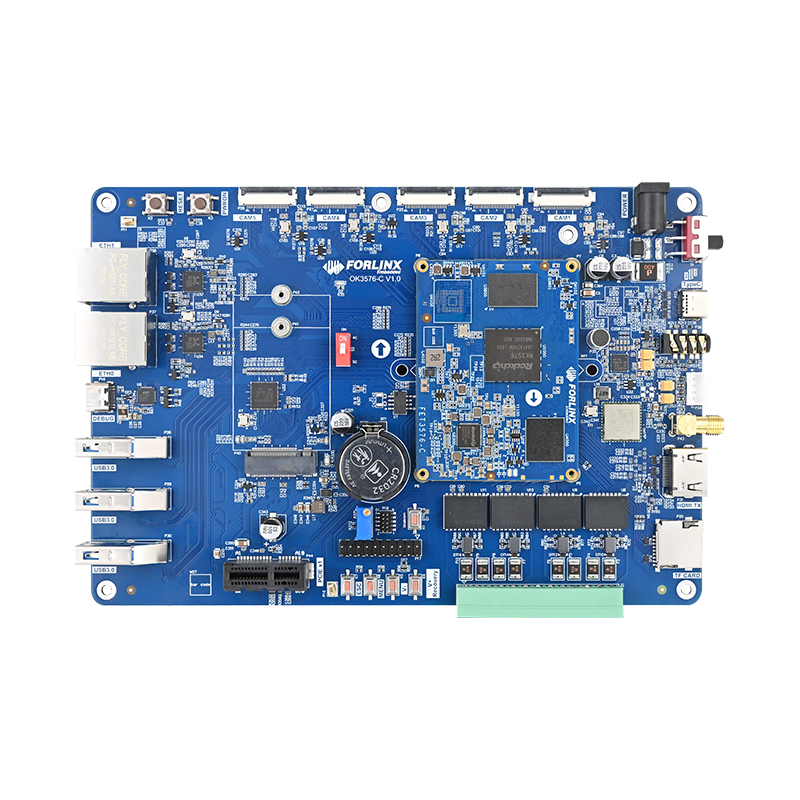

Forlinx Embedded has launched multiple embedded AI products, such as development boards like OK3588-C, OK3576-C, OK-MX9352-C, OK536-C, and the AI edge computing terminal FCU3001. The computing power ranges from 0.5 TOPS to 21 TOPS, which can meet the AI development needs of different customers. If you are interested in these products, please feel free to contact us. Forlinx Embedded will provide you with detailed technical support and guidance.